As advances in AI continue and even accelerate, businesses everywhere are adapting their infrastructure to take advantage of these new technologies. FuriosaAI partnered with ClearML and the AI Infrastructure Alliance to survey AI and technology leaders at 1,000 companies across North America, Europe, and Asia to learn about their AI infrastructure plans, priorities, and key concerns. More than half of the companies represented had more than 10,000 employees; the rest had between 500 and 10,000. The most common titles among respondents were Chief Technology Officer, Head of Data Science, and VP of Artificial Intelligence.

The full report, titled “The State of AI Infrastructure at Scale 2024: Unveiling Future Landscapes, Key Insights, and Business Benchmarks,” is available now. This blog post summarizes the most noteworthy insights.

FuriosaAI worked with ClearML to conduct this global survey because we are committed to helping businesses gain access to cutting-edge AI hardware that addresses their real-world needs – including power efficiency, ease of use, and performance. Our Gen 1 Vision NPU is available now for accelerating computer vision applications such as object detection, OCR, and super resolution. We are currently preparing to launch our second-generation accelerator for LLM and multimodal inference.

Views on Generative AI, open source, and cost-efficient compute for inference

Nearly all respondents (96%) said they plan to expand their AI compute infrastructure in 2024 – either on-premises or in the cloud. The survey found that Generative AI was top of mind for many tech company executives, but there are many questions yet to be settled. Many said they were concerned about falling behind in the rush to leverage Generative AI in their products, but even more (nearly a third) said their top Generative AI concern was moving too fast and making mistakes, such as prioritizing the wrong use cases. This suggests that many technologists and tech executives want to expand their AI infra capacity, but haven’t yet formed a concrete plan to do so. If this is correct, it could mean we are still in the early stages of AI infrastructure adoption.

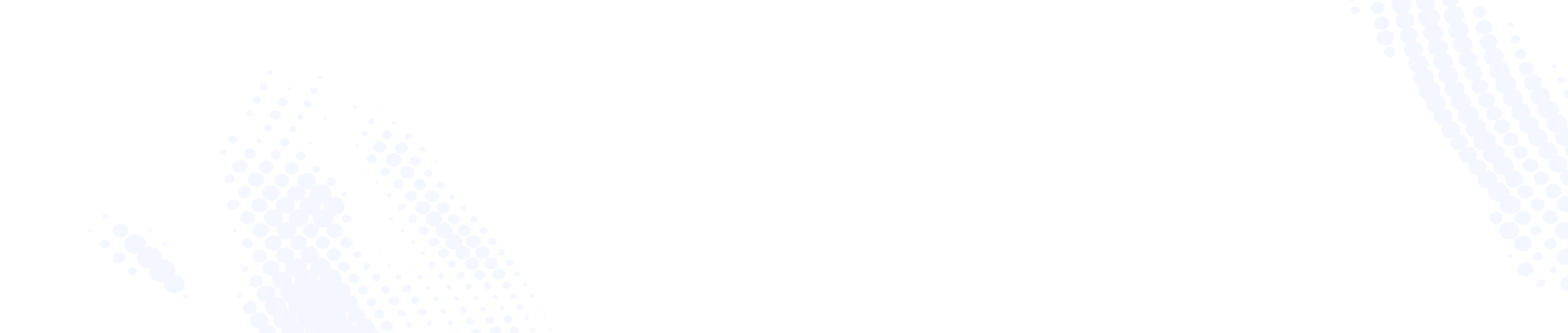

Nearly all executives (95%) who responded to the survey said having and using open-source AI tools and frameworks was important for their organization. Nearly all plan to use open-source tools to customize AI models in 2024. PyTorch was cited by 61% of respondents as their framework of choice, 43% said their preferred framework was TensorFlow, and 16% said JAX. Approximately one third of respondents said they currently use or plan to use CUDA for model customization.

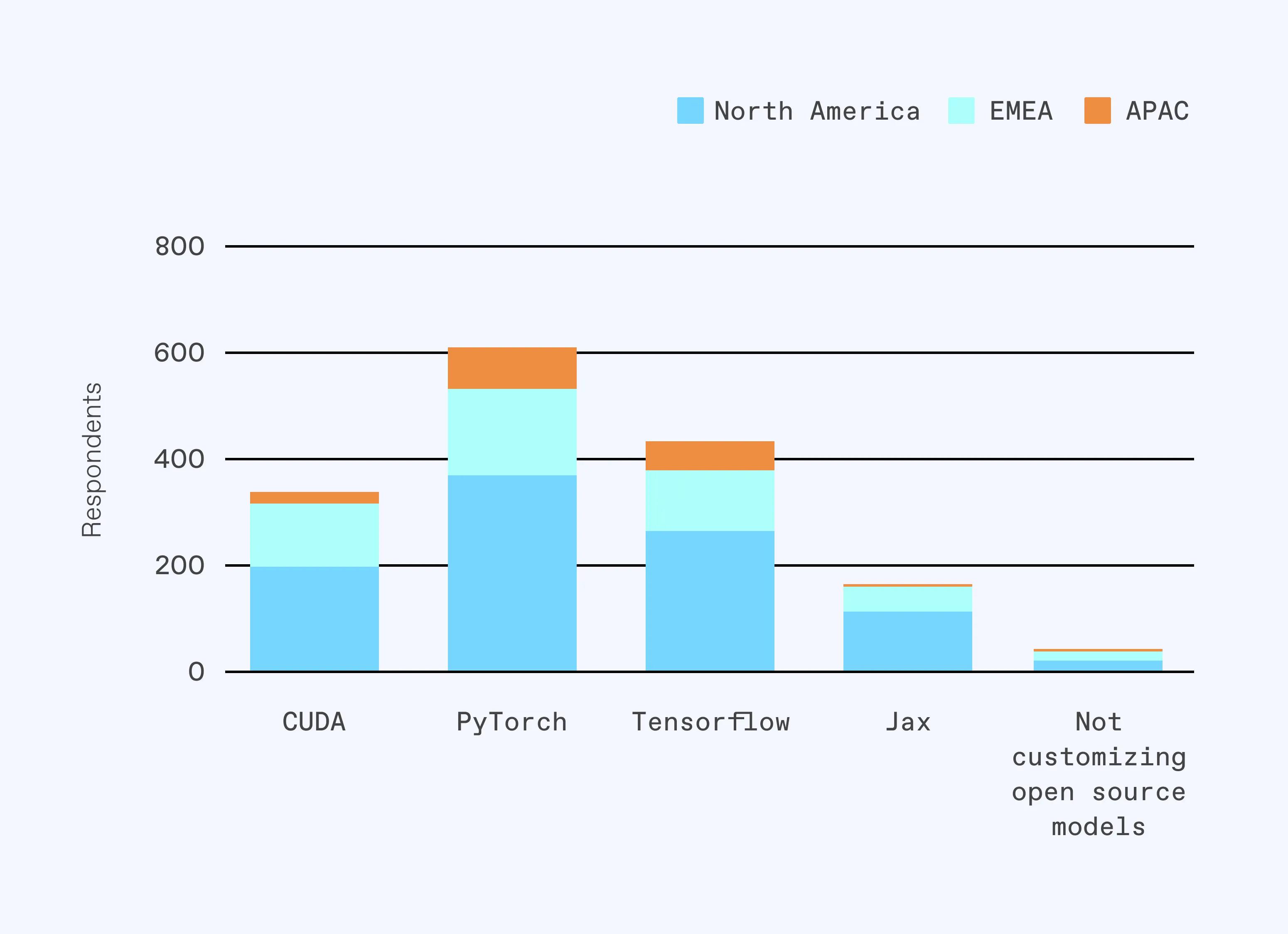

Additional infrastructure for inference is a key need for many technology companies – and cost is a key consideration. More respondents said they were looking for cost-effective alternatives to GPUs for inference (about 52%) than for training (about 27%). And many respondents weren’t yet aware they had other options for AI compute beyond just GPUs.

The survey identified several other common challenges among respondents. Most said they were dissatisfied with their current job scheduling and orchestration tools, and many reported that their business’s current AI hardware was underutilized.

Finally, the executives surveyed said that, beyond the cost of new compute infrastructure, their key challenges include latency and power consumption.

We encourage you to download the entire report to learn more about businesses’ AI infrastructure plans, including allocation and scheduling tools, benchmarking, and more.

Building better AI compute

These findings echo the opinions of FuriosaAI’s own customers and partners. We believe there is a significant need for new data center hardware that runs the AI models that businesses need, meets their latency requirements, and substantially reduces their total cost of ownership. With the launch of FuriosaAI’s second-generation chip this year, we look forward to continuing to provide businesses around the world with AI infra solutions that address the needs and concerns identified in this survey.

Written by

The Furiosa Team
