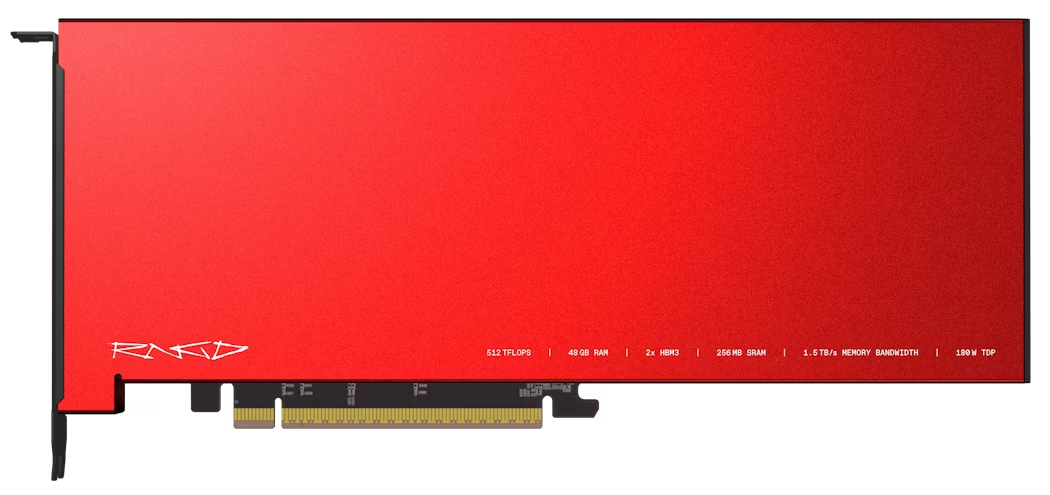

Furiosa RNGD - Gen 2 data center accelerator

Powerfully efficient AI inference for enterprise and cloud

EFFICIENT AI INFERENCE IS HERE

RNGD (pronounced "Renegade") delivers high-performance LLM and multimodal deployment capabilities while maintaining a radically efficient 180W power profile.

| Spec | Details |
|---|---|
| 512 TFLOPS | 64 TFLOPS (FP8) × 8 processing elements |
| 48 GB | HBM3 memory capacity |
| 2 × HBM3 | CoWoS-S, 6.0 Gbps |
| 256 MB SRAM | 384 TB/s on-chip bandwidth |
| 1.5 TB/s | HBM3 memory bandwidth |
| 180 W TDP | Targeting air-cooled data centers |
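The headline numbers above are arithmetically consistent. The Python sketch below reproduces them and derives two ratios the page does not state: the roofline ridge point (peak FLOPs per byte of HBM traffic) and peak compute per watt. It assumes the standard 1024-bit interface per HBM3 stack, which this page does not specify. It also uses peak figures rather than measured throughput.

```python
# Back-of-the-envelope checks on the RNGD headline specs.
# Assumption (not stated on the product page): each HBM3 stack uses the
# standard 1024-bit HBM interface.

PES = 8                    # processing elements
TFLOPS_PER_PE_FP8 = 64     # FP8 TFLOPS per processing element
HBM_STACKS = 2
PIN_RATE_GBPS = 6.0        # per-pin data rate, Gbit/s
BUS_WIDTH_BITS = 1024      # assumed interface width per HBM3 stack
TDP_W = 180

peak_tflops = PES * TFLOPS_PER_PE_FP8                    # 512 TFLOPS
per_stack_gbs = PIN_RATE_GBPS * BUS_WIDTH_BITS / 8       # GB/s per stack
hbm_tbs = HBM_STACKS * per_stack_gbs / 1000              # total TB/s

# Roofline ridge point: FLOPs a kernel must perform per byte of HBM
# traffic before it stops being memory-bound (peak figures only).
ridge_flop_per_byte = (peak_tflops * 1e12) / (hbm_tbs * 1e12)

# Peak compute divided by TDP (not a measured efficiency).
tflops_per_watt = peak_tflops / TDP_W

print(f"peak compute    : {peak_tflops} TFLOPS (FP8)")
print(f"HBM3 bandwidth  : {hbm_tbs:.3f} TB/s")
print(f"ridge point     : {ridge_flop_per_byte:.0f} FLOP/byte")
print(f"compute per watt: {tflops_per_watt:.2f} TFLOPS/W at TDP")
```

Under these assumptions the bandwidth works out to 1.536 TB/s, which matches the quoted 1.5 TB/s. Kernels whose arithmetic intensity falls well below the roughly 333 FLOP/byte ridge point are limited by HBM bandwidth rather than by peak compute. Small-batch LLM decoding is a typical example of such a kernel.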

PCIe P2P support for LLMs

BF16, FP8, INT8, and INT4 support

Multiple-instance and virtualization support

Secure boot and model encryption
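The sketch below relates the supported data types to the 48 GB memory capacity. It estimates the largest model whose weights alone would fit on one card at each precision. This is a rough upper bound with several simplifications: GB is taken as 10^9 bytes, and the KV cache, activations, runtime buffers, and quantization metadata (scales, zero points) are all ignored.

```python
# Rough upper bound on model size (weights only) that fits in 48 GB of
# HBM3 at each supported precision. Simplifications: 1 GB = 10**9 bytes;
# KV cache, activations, runtime buffers and quantization scales ignored.

HBM_BYTES = 48 * 10**9

BYTES_PER_PARAM = {
    "BF16": 2.0,
    "FP8": 1.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def max_params_billion(dtype: str, capacity_bytes: int = HBM_BYTES) -> float:
    """Billions of parameters whose weights alone fill capacity_bytes."""
    return capacity_bytes / BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>4}: ~{max_params_billion(dtype):.0f}B parameters")
```

Real deployments need headroom for the KV cache and activations, so practical limits are lower. Larger models must be split across multiple cards.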

Tensor contraction, not matmul

Tensor Contraction Processor (TCP)

At the heart of Furiosa RNGD is the Tensor Contraction Processor (TCP) architecture (ISCA 2024), designed specifically for efficient tensor contraction operations.

The fundamental computation of modern-day deep learning is tensor contraction, a higher-dimensional generalization of matrix multiplication. However, most commercial deep learning accelerators today use fixed-size matmul instructions as their primitives.

RNGD breaks away from that, unlocking powerful performance and efficiency.
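The phrase "higher-dimensional generalization of matrix multiplication" can be made concrete with a minimal pure-Python sketch of an einsum-style contraction. It illustrates the math only and does not show how RNGD or Furiosa Compiler represents contractions. The helpers `make` and `contract`, the dict-based tensor layout, and the index labels are all hypothetical. The example computes multi-head attention scores (`bhqd,bhkd->bhqk`) as one contraction. It then checks the result against a loop of plain 2-D matmuls, which is the form a fixed-size matmul primitive would see.

```python
from itertools import product
from typing import Callable, Dict, Tuple

# A tensor is a dict mapping each index tuple to its value; `sizes`
# maps each single-character index label to that dimension's extent.
Tensor = Dict[Tuple[int, ...], float]


def make(labels: str, sizes: Dict[str, int], fn: Callable[..., float]) -> Tensor:
    """Build a dense tensor whose entry at index tuple idx is fn(*idx)."""
    return {idx: fn(*idx) for idx in product(*(range(sizes[c]) for c in labels))}


def contract(a: Tensor, a_lbl: str, b: Tensor, b_lbl: str,
             out_lbl: str, sizes: Dict[str, int]) -> Tensor:
    """Einsum-style contraction of two tensors.

    Labels kept in out_lbl index the result; every other label is summed
    over. Matrix multiplication is the special case ik,kj->ij.
    """
    labels = list(dict.fromkeys(a_lbl + b_lbl))  # unique labels, first-seen order
    out: Tensor = {}
    for values in product(*(range(sizes[c]) for c in labels)):
        env = dict(zip(labels, values))
        ka = tuple(env[c] for c in a_lbl)
        kb = tuple(env[c] for c in b_lbl)
        ko = tuple(env[c] for c in out_lbl)
        out[ko] = out.get(ko, 0.0) + a[ka] * b[kb]
    return out


# Attention scores: batch b, heads h, queries q, keys k, head dim d.
sizes = {"b": 2, "h": 3, "q": 4, "k": 5, "d": 6}
Q = make("bhqd", sizes, lambda b, h, q, d: b + 0.5 * h - q + 0.25 * d)
K = make("bhkd", sizes, lambda b, h, k, d: (b * h + k) % 3 - 0.5 * d)

# One contraction expresses the whole batched, multi-head computation.
scores = contract(Q, "bhqd", K, "bhkd", "bhqk", sizes)

# The same result, lowered to a loop of plain 2-D matmuls over b and h.
for b, h in product(range(sizes["b"]), range(sizes["h"])):
    q_bh = {(q, d): Q[(b, h, q, d)]
            for q in range(sizes["q"]) for d in range(sizes["d"])}
    kT_bh = {(d, k): K[(b, h, k, d)]
             for d in range(sizes["d"]) for k in range(sizes["k"])}
    mm = contract(q_bh, "qd", kT_bh, "dk", "qk", sizes)
    assert all(abs(mm[qk] - scores[(b, h) + qk]) < 1e-9 for qk in mm)
```

Both forms produce identical scores. The difference is visibility: the loop exposes only fixed 2-D `ik,kj->ij` pieces, while the single contraction keeps the batch and head dimensions visible to whatever maps the work onto hardware.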

Tensor mapping for max utilization

We elevate the programming interface between hardware and software to treat tensor contraction as a single, unified operation.

This fundamental design choice streamlines programming and maximizes parallelism and data reuse, while allowing compute and memory resources to be flexibly reconfigured based on tensor shapes.

Furiosa Compiler leverages this hardware flexibility and reconfigurability to select optimized tactics, delivering powerful and efficient deep learning acceleration at every scale of deployment.
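A deliberately simplified model shows why choosing a mapping per tensor shape can matter. In the sketch below, a GEMM-shaped contraction of size (M, N, K) runs in tiles. Each dimension is padded up to a multiple of the tile size, and the padded work is wasted. The candidate tile shapes, the `utilization` and `pick_tile` helpers, and the cost model itself are all hypothetical. This is not Furiosa Compiler's tactic search. It also ignores data reuse and memory bandwidth, which a real compiler must weigh.

```python
from math import ceil, prod
from typing import Iterable, Tuple

Shape = Tuple[int, int, int]  # (M, N, K) for a GEMM-shaped contraction


def utilization(shape: Shape, tile: Shape) -> float:
    """Fraction of issued multiply-accumulates that do useful work.

    Toy model: each dimension is padded up to a multiple of its tile
    size, and all padded work is wasted.
    """
    useful = prod(shape)
    issued = prod(ceil(s / t) * t for s, t in zip(shape, tile))
    return useful / issued


def pick_tile(shape: Shape, candidates: Iterable[Shape]) -> Shape:
    """Pick the candidate tile with the highest utilization (ties: first)."""
    return max(candidates, key=lambda t: utilization(shape, t))


# Hypothetical tile shapes, each covering the same 2**18 MACs.
CANDIDATES = [(128, 128, 16), (16, 128, 128), (8, 256, 128), (1, 512, 512)]
FIXED = CANDIDATES[0]  # stand-in for a single fixed-size matmul primitive

workloads = {
    "decode (M=1)": (1, 4096, 4096),
    "prefill (M=2048)": (2048, 4096, 4096),
}
for name, shape in workloads.items():
    best = pick_tile(shape, CANDIDATES)
    print(f"{name}: fixed tile {utilization(shape, FIXED):.4f}, "
          f"best tile {best} {utilization(shape, best):.4f}")
```

In this toy model, a fixed 128×128×16 tile wastes over 99% of its work on a single-token decode step (M=1), while a tile chosen for that shape avoids padding entirely. For the large prefill shape every candidate divides evenly, so the fixed tile is already adequate.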

Tensor Contraction Processor

TCP is the compute architecture underlying Furiosa accelerators. By treating tensor operations as first-class citizens, the Tensor Contraction Processor (TCP) unlocks unparalleled energy efficiency.

INFERENCE WITHOUT CONSTRAINTS

Performance

Deploy the most capable models with strong latency and throughput.

Efficiency

Lower total cost of ownership with less energy, fewer racks, and compatibility with today's air-cooled data centers.

Programmability

Stay future-proof for tomorrow’s models and transition with ease.

SOFTWARE FOR LLM DEPLOYMENT

Furiosa SW Stack consists of a model compressor, serving framework, runtime, compiler, profiler, debugger, and a suite of APIs for ease of programming and deployment.

Built for advanced inference deployment

Comprehensive software toolkit for optimizing large language models on RNGD. User-friendly APIs facilitate seamless state-of-the-art LLM deployment.

Maximizing data center utilization

Ensure higher utilization and flexibility for small and large deployments with containerization, SR-IOV, Kubernetes, and other cloud-native components.

Robust ecosystem support

Effortlessly deploy models from library to end user with PyTorch 2.x integration. Leverage the vast advancements of open-source AI and seamlessly transition models into production.

Blog

LG U+ and FuriosaAI unveil the Sovereign AI Appliance (News)

Secure, Production-Ready Agentic AI: The Furiosa and Helikai Partnership (News)

Furiosa SDK 2026.1: Hybrid batching, prefix caching, and native k8s support (Technical Updates)