Introducing Furiosa NXT RNGD Server: Efficient AI inference at data center scale

We are excited to introduce FuriosaAI’s NXT RNGD Server—our first branded, turnkey solution for AI inference.

Built around our RNGD accelerators, NXT RNGD Server is an optimized system that delivers high performance on today’s most important AI workloads while fitting seamlessly into existing data center environments.

With NXT RNGD Server, enterprises can move from experimentation to deployment faster than ever. The system ships with the Furiosa SDK and Furiosa LLM runtime preinstalled, so applications can begin serving as soon as the system is installed. The platform is optimized to run over standard PCIe interconnects, eliminating the need for proprietary fabrics or exotic infrastructure.

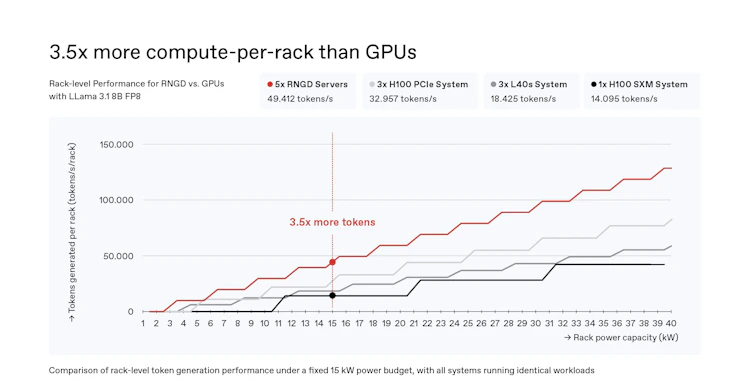

Designed for compatibility, NXT RNGD Server runs at just 3 kW per system, allowing organizations to scale AI within the power and cooling limits of most modern facilities. This makes NXT RNGD Server a practical and cost-effective way to build out AI factories inside the data centers enterprises already operate.

Technical Specifications

Compute: Up to 8 × RNGD accelerators (4 petaFLOPS FP8 per server) with dual AMD EPYC processors. Supports BF16, FP8, INT8, and INT4

Memory: 384 GB HBM3 (12 TB/s bandwidth) plus 1 TB DDR5 system memory

Storage: 2 × 960 GB NVMe M.2 (OS), 2 × 3.84 TB NVMe U.2 (internal)

Networking: 1G management NIC plus 2 × 25G data NICs

Power & Cooling: 3 kW system power, redundant 2,000 W Titanium PSUs, air-cooled

Security & Management: Secure Boot, TPM, BMC attestation, dual management paths (PCIe + I2C)

Software: Preinstalled Furiosa SDK and Furiosa LLM runtime with native Kubernetes and Helm integration
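As a quick sanity check on the figures above, the per-accelerator share of each server-level total can be worked out directly. This is only a sketch: it assumes a fully populated server (eight cards) and that the quoted totals divide evenly across the cards. The constant and function names are illustrative, not part of any Furiosa tooling.

```python
# Server-level totals quoted in the specifications above.
ACCELERATORS = 8          # "up to 8 x RNGD"; a full configuration is assumed
FP8_PFLOPS_TOTAL = 4.0    # petaFLOPS FP8 per server
HBM_GB_TOTAL = 384        # GB of HBM3 per server
HBM_TBPS_TOTAL = 12.0     # TB/s of HBM3 bandwidth per server


def per_card(total, cards=ACCELERATORS):
    """Split a server-level total evenly across the cards (an assumption)."""
    return total / cards


fp8_tflops_per_card = per_card(FP8_PFLOPS_TOTAL) * 1000  # PFLOPS -> TFLOPS
hbm_gb_per_card = per_card(HBM_GB_TOTAL)
hbm_tbps_per_card = per_card(HBM_TBPS_TOTAL)

print(f"FP8 compute per card:   ~{fp8_tflops_per_card:.0f} TFLOPS")
print(f"HBM3 capacity per card:  {hbm_gb_per_card:.0f} GB")
print(f"HBM3 bandwidth per card: {hbm_tbps_per_card} TB/s")
```

Because the 4 petaFLOPS figure is itself rounded, the per-card compute number is only as precise as that input.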

Real-world benefits and proven performance

NXT RNGD Server’s superior power efficiency significantly lowers total cost of ownership (TCO) for businesses. Enterprise customers can run advanced AI efficiently at scale within their current infrastructure and power limits, whether on on-prem servers or in cloud data centers. This matters because more than 80% of data centers today are air-cooled and operate at 8 kW per rack or less.

FuriosaAI CTO Hanjoon Kim talks with guests at OpenAI's Seoul launch event.

For businesses with sensitive workloads, regulatory compliance requirements, or enhanced privacy and security needs, NXT RNGD Server offers complete control over enterprise data, with model weights running entirely on local infrastructure.

Global enterprises have validated NXT RNGD Server’s performance. In July, LG AI Research announced that it had adopted RNGD for inference computing with its EXAONE models. Running LG’s EXAONE 3.5 32B model on a single server with four RNGD cards and a batch size of one, LG AI Research achieved 60 tokens/second with a 4K context window and 50 tokens/second with a 32K context window.
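To put those throughput figures in more familiar terms, the short sketch below converts tokens per second into time per generated token and into the time needed to produce a response of a given length. It assumes the reported rates apply steadily across the whole response and ignores prompt processing time, which the figures above do not break out. The 500-token response length is an arbitrary example.

```python
def ms_per_token(tokens_per_second):
    """Average time to generate one token, in milliseconds."""
    return 1000.0 / tokens_per_second


def seconds_for(n_tokens, tokens_per_second):
    """Time to generate n_tokens at a steady rate, in seconds."""
    return n_tokens / tokens_per_second


# Throughput reported for EXAONE 3.5 32B on four RNGD cards, batch size one.
reported = {"4K context": 60, "32K context": 50}

RESPONSE_TOKENS = 500  # arbitrary example length, not from the announcement

for label, tps in reported.items():
    print(
        f"{label}: {ms_per_token(tps):.1f} ms/token, "
        f"{seconds_for(RESPONSE_TOKENS, tps):.1f} s for {RESPONSE_TOKENS} tokens"
    )
```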

We are now working with LG AI Research to supply NXT RNGD Server systems to enterprises using EXAONE across key sectors, including electronics, finance, telecommunications, and biotechnology.

Making rapid deployment of advanced AI available to everyone

With global data center demand at 60 GW in 2024 and expected to triple by the end of the decade, the industry faces a once-in-a-generation transformation. More than 80 percent of facilities today are air-cooled and operate at 8 kW per rack or less, making them poorly suited for GPU-based systems that require liquid cooling and 10 kW+ per server.
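The rack-level arithmetic behind this point is simple enough to sketch. The example below assumes an 8 kW rack budget (the upper end of the range cited), 3 kW per NXT RNGD Server, and 10 kW as the lower bound for a GPU-based server. It ignores power drawn by switches and other rack equipment as well as any safety headroom, so real deployments may fit fewer systems.

```python
import math


def servers_per_rack(rack_budget_kw, server_kw):
    """Whole servers that fit within a rack's power budget."""
    return math.floor(rack_budget_kw / server_kw)


RACK_KW = 8.0          # typical air-cooled rack limit cited above
NXT_RNGD_KW = 3.0      # NXT RNGD Server system power
GPU_SERVER_KW = 10.0   # lower bound of the "10 kW+" figure cited above

rngd_servers = servers_per_rack(RACK_KW, NXT_RNGD_KW)
gpu_servers = servers_per_rack(RACK_KW, GPU_SERVER_KW)

print(f"NXT RNGD Servers per 8 kW rack: {rngd_servers}")
print(f"10 kW GPU servers per 8 kW rack: {gpu_servers}")
print(f"Rack power left over with NXT RNGD: {RACK_KW - rngd_servers * NXT_RNGD_KW} kW")
```

Under these assumptions, two NXT RNGD Servers fit in a single 8 kW rack with 2 kW to spare, while a single 10 kW server already exceeds the rack's budget.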

NXT RNGD Server provides a practical path forward. It allows organizations to deploy advanced AI within their existing facilities, without prohibitive energy costs or disruptive retrofits. Engineered as a plug-and-play system, NXT RNGD Server combines AI-optimized silicon with Furiosa LLM, a vLLM-compatible serving framework featuring built-in OpenAI API support, enabling organizations to deploy and scale AI workloads from day one.
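Because the serving framework exposes an OpenAI-style API, existing client code should in principle need little more than a new base URL. The sketch below builds a chat completion request using only the Python standard library. The base URL, port, and model name are placeholders chosen for illustration, not values from Furiosa documentation, and the `/chat/completions` path follows the general OpenAI API convention rather than anything confirmed for a specific deployment.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=128):
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Placeholder endpoint and model name; substitute the values for your server.
request = build_chat_request(
    base_url="http://localhost:8000/v1",
    model="example-model",
    prompt="Summarize the benefits of air-cooled inference servers.",
)
print(request.get_method(), request.full_url)
# reply = send(request)  # uncomment once a server is reachable at base_url
```

Keeping request construction separate from the network call makes it easy to inspect the payload before pointing it at a live endpoint.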

By combining silicon and system design, NXT RNGD Server makes efficient, enterprise-ready, and future-proof AI infrastructure a reality.

Availability

We are taking inquiries and orders for January 2026.

Download the datasheet here and sign up for RNGD updates here.