FuriosaAI and OpenAI showcase the future of sustainable enterprise AI

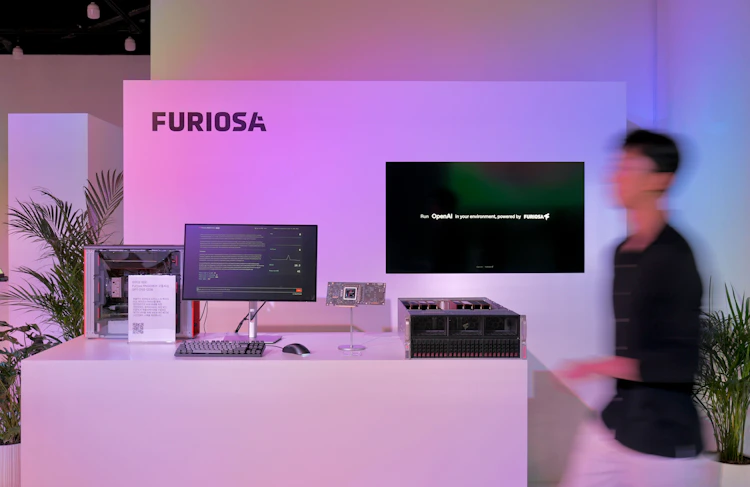

At the grand opening of OpenAI’s new Seoul office today, we are partnering with the world’s biggest AI startup to showcase its new open-weight gpt-oss-120b model running live on RNGD, our flagship AI accelerator. We are excited to represent the foundational compute layer of the AI stack at this milestone event.

Pairing RNGD’s performance and power efficiency with new models like gpt-oss will play a critical role in making advanced AI more sustainable and accessible. This collaboration with OpenAI highlights the essential role that AI-native hardware like RNGD plays in building AI that is more economically and environmentally sustainable for enterprises around the world.

The demonstration at OpenAI’s Seoul office featured a real-time chatbot running gpt-oss-120b efficiently on just two RNGD cards using MXFP4 precision. This setup, which works in either a standard workstation or an RNGD server, demonstrates that cutting-edge models can be deployed within the existing power budgets of typical data centers. It removes the prohibitive energy costs and complex infrastructure requirements of GPU deployments, making advanced AI truly accessible for enterprise customers.
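A rough weight-memory estimate helps show why a low-precision format matters for fitting a model of this size onto a couple of accelerator cards. The sketch below assumes the OCP Microscaling (MX) layout for MXFP4, in which each block of 32 four-bit E2M1 values shares one 8-bit E8M0 scale, and an approximate total of 117 billion parameters for gpt-oss-120b. It also simplifies by storing every weight in a single format, although real checkpoints typically keep some tensors at higher precision. The figures are illustrative only and do not describe how RNGD actually lays out the model.

```python
# Rough weight-memory estimate for a large model under different number formats.
# Assumptions (not from the announcement): ~117e9 total parameters, and every
# weight stored in one format. Real checkpoints keep some tensors (for example
# attention or embeddings) in higher precision, so treat results as a ballpark.

MXFP4_BLOCK_SIZE = 32  # elements sharing one scale (OCP MX layout)
FP4_ELEMENT_BITS = 4   # each element is a 4-bit E2M1 value
E8M0_SCALE_BITS = 8    # one shared power-of-two scale per block


def mxfp4_bits_per_element() -> float:
    """Effective storage per MXFP4 element, including its share of the block scale."""
    return FP4_ELEMENT_BITS + E8M0_SCALE_BITS / MXFP4_BLOCK_SIZE


def weight_gigabytes(num_params: float, bits_per_param: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 117e9  # assumption: approximate total parameter count
    bf16_gb = weight_gigabytes(params, 16)
    mxfp4_gb = weight_gigabytes(params, mxfp4_bits_per_element())
    print(f"BF16 : {bf16_gb:6.1f} GB")
    print(f"MXFP4: {mxfp4_gb:6.1f} GB ({bf16_gb / mxfp4_gb:.2f}x smaller)")
```

Under these assumptions the weights shrink from about 234 GB in BF16 to about 62 GB in MXFP4, roughly 3.8x smaller, because the shared scale adds only a quarter of a bit per element on top of the 4-bit value.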

Why this matters for enterprises

The combination of OpenAI’s powerful open-weight models and Furiosa’s power-efficient hardware delivers crucial benefits for a wide range of enterprise customers:

- Lower cost of ownership: Run state-of-the-art open-weight models without vendor lock-in or an unsustainable Total Cost of Ownership (TCO).

- Data sovereignty: Quickly deploy and scale high-performance local infrastructure for sensitive workloads, with complete control over enterprise data and model weights, stronger regulatory compliance, and greater security and privacy protections.

- Deploy anywhere: Run advanced AI efficiently at scale within current infrastructure and power limitations – using on-prem servers or cloud data centers.

How RNGD delivers breakthrough efficiency

At the core of RNGD is our Tensor Contraction Processor (TCP) architecture, designed from the ground up to eliminate the inefficiencies of using GPUs for AI applications. This AI-native, software-driven approach maximizes parallelism and data reuse, resulting in world-class performance and radical energy efficiency.

Our technology has been proven in production. In LG AI Research’s real-world deployments, RNGD delivered better performance per watt while also meeting rigorous reliability, performance, and efficiency requirements.

Enterprise customers are now deploying advanced large language models on RNGD — within existing power and cost budgets, and without disruptive changes to their data centers.

Visit furiosa.ai for more information.

Written by

The Furiosa Team
