AI agents explained: Core concepts and key capabilities


Summary

- AI agents are emerging as the next evolution of LLMs.
- Agents overcome the limitations of LLMs by actively perceiving, reasoning, and acting in dynamic environments.
- Research on Agentic AI is progressing rapidly, and many variations can boost performance further.
- Agent-based AI requires significantly more inference compute because of complex reasoning and tool use.
- Furiosa's RNGD accelerator is designed to meet the growing inference demands of the Agentic AI era.

Agents have emerged as a new paradigm in AI. AI agents overcome the key limitations of traditional LLMs and enable more intelligent and autonomous problem-solving systems. This article explores this shift and how it is reshaping the industry’s AI hardware needs.

Unlike conventional LLMs, AI agents perceive dynamically changing external environments, make autonomous decisions, and work toward given objectives. To accomplish this, they require multiple stages of reasoning, the use of numerous models, and anywhere from several to tens of times more inference computation than traditional approaches.

The era of AI agents also means that inference-time compute is becoming more important. As a result, inference hardware with high computational efficiency and fast processing speeds will become increasingly critical. Furiosa developed RNGD, our flagship AI inference accelerator, to address this need for highly efficient AI hardware.

We’ll talk more in future blog posts about RNGD’s role in powering agents, but first it’s important to look at what agents are, how they differ from standard LLMs, and how they achieve improved performance.

The Arrival of the Inference Era: Beyond the Limitations of Training

For several remarkable years, larger datasets combined with more training compute steadily fueled big performance improvements in LLMs. The situation is very different today. Scarcity of new data has become a critical challenge for training larger models, and the cost of training multi-trillion-parameter models is prohibitive even for tech giants. Self-learning techniques and synthetic (AI-generated) data might mitigate the lack of new training data, but research has shown that this approach risks model collapse.

Industry leaders have highlighted these challenges, declaring that “the age of giant AI models is over.”

Other experts have been less dramatic, but no less concerned. The Alan Turing Institute’s Andrew Duncan predicts the supply of public data for model training will likely be depleted by 2026. He also warns that potential additional training data may already be contaminated due to the spread of AI-generated content across the internet.

In this context, agent-based inference-time compute is emerging as an important breakthrough. This approach is gaining attention because it can deliver higher-quality responses without increasing model size. And, as detailed below, AI agents employing collaborative strategies have not only demonstrated superior performance on standard LLM benchmarks but are also proving more capable and useful in real-world use cases.

From LLMs to Agents: The Natural Evolution of AI

AI agents are not a technology that stands in opposition to Large Language Models (LLMs) but rather one that builds on and complements them. As Microsoft has mentioned, the idea behind agents is not entirely new, but it has become feasible as LLM capabilities have improved.

AI agents go beyond simple question-and-answer interactions; they independently analyze situations, devise plans, and utilize tools to solve problems and achieve a given objective. NVIDIA defines them as “systems that autonomously solve complex, multistep problems through sophisticated reasoning and iterative planning.”

A single LLM excels at language understanding and generation. As an AI tool, however, it has several critical limitations:

Static knowledge base: LLMs only possess knowledge contained in their training dataset, making it difficult for them to utilize real-time or up-to-date information.

Single-turn interactions: Most LLMs respond to each query independently, without fully leveraging the context of previous conversations.

Lack of goal-oriented behavior: LLMs generate direct responses to given inputs but struggle to engage in strategic thinking or long-term planning to achieve specific goals.

To overcome these limitations, AI agents model human problem-solving approaches. As exemplified in NVIDIA’s Agentic AI framework, AI agents implement the following human behaviors:

Perceive: Gather information from the environment and understand the situation.

Reason: Analyze the collected information to develop plans and make decisions.

Act: Execute actions based on the planned strategy.

Learn: Evaluate outcomes and accumulate experience.

This approach closely resembles the iterative and adaptive behavioral patterns humans exhibit when solving complex problems. AI agents actively gather necessary information, evaluate intermediate results, and modify their plans when needed to achieve their objectives.
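The perceive-reason-act-learn cycle above can be sketched as a simple control loop. This is a minimal illustration under stated assumptions: the environment is a toy number the agent must move to a target, and the class and method names (`Environment`, `Agent`, `perceive`, `reason`, `act`, `learn`) are hypothetical, not part of NVIDIA's framework or any library. In a real agent, the `reason` step would be an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Toy environment (hypothetical): a number the agent must move to a target."""
    value: int = 0


@dataclass
class Agent:
    target: int
    history: list = field(default_factory=list)  # accumulated experience

    def perceive(self, env: Environment) -> int:
        # Gather information about the current state of the environment.
        return env.value

    def reason(self, observation: int) -> int:
        # Decide the next step: move one unit toward the target (0 means done).
        if observation < self.target:
            return 1
        if observation > self.target:
            return -1
        return 0

    def act(self, env: Environment, step: int) -> None:
        # Execute the chosen action, which changes the environment.
        env.value += step

    def learn(self, observation: int, step: int) -> None:
        # Record the outcome so later decisions could draw on it.
        self.history.append((observation, step))

    def run(self, env: Environment, max_steps: int = 100) -> int:
        for _ in range(max_steps):
            obs = self.perceive(env)
            step = self.reason(obs)
            if step == 0:  # goal reached
                break
            self.act(env, step)
            self.learn(obs, step)
        return env.value


env = Environment(value=2)
agent = Agent(target=5)
print(agent.run(env), len(agent.history))  # 5 3
```

The loop stops as soon as the reasoning step reports that the goal is met, mirroring how an agent re-checks its situation after every action instead of committing to a fixed plan up front.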

Understanding AI Agent Architecture: The Three Core Components

AI agents have three key components:

Orchestration module

The orchestration module serves as the central control system or brain of the agent. This module is responsible for analyzing goals, formulating task plans, and monitoring execution. Additionally, the orchestrator coordinates the operations of other agent components, managing the overall problem-solving process.

This includes decision-making by integrating results and insights from various models and tools. For example, instead of relying on a single model for a task, the agent may perform a hybrid search using multiple models, orchestrating and planning actions to achieve the objective.

AI models

One or more AI models form the agent’s cognitive and reasoning engine. Each model can be designed for specialized tasks, such as language comprehension, visual processing, or decision-making. These models are dynamically activated according to the plan orchestrated by the orchestration module to perform specific tasks. While an agent may use several specialized models, each one requires much less compute to train than, say, the best LLMs of a year or two ago.

Tools

Tools act as interfaces that enable the agent to gather information and interact with the external world.

Using frameworks such as LangChain and AutoGen, agents can perform real-time data retrieval and analysis, conduct computations, and process data. Additionally, they can utilize external services via APIs and access file systems or databases to obtain necessary information. The integration of these diverse tools significantly enhances agents’ problem-solving capabilities.
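To make the tool interface concrete, here is a minimal sketch of how an orchestrator might dispatch a tool call that the model emits as JSON. The JSON shape `{"tool": ..., "arguments": {...}}`, the `TOOLS` registry, and the `add` and `word_count` tools are all hypothetical; LangChain and AutoGen each define their own tool-calling formats, which this does not reproduce.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the orchestrator can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def add(a: float, b: float) -> float:
    return a + b


@tool
def word_count(text: str) -> int:
    return len(text.split())


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call emitted by the model and run the matching tool.

    Assumed (hypothetical) format: {"tool": "<name>", "arguments": {...}}
    """
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


print(dispatch('{"tool": "add", "arguments": {"a": 2, "b": 3}}'))  # 5
print(dispatch('{"tool": "word_count", "arguments": {"text": "agents use tools"}}'))  # 3
```

Keeping tools behind a name-based registry means the model only has to generate a short structured string; the orchestrator validates it and runs the real code.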

The real-world benefits of agents are significant. For example, users likely expect an intelligent AI assistant to understand their intent even from simple questions or inputs. Asking, "Can you give me directions to 145 Dosan-daero?" should produce a response like, "FuriosaAI’s office is five miles away. Normally, it takes around 20 minutes by car, but since traffic tends to be heavy during lunchtime, I recommend leaving by 1:20 PM."

To generate such responses, the orchestrator can ask the model to understand the intent of the question and formulate a plan to address it.

The orchestrator can then identify the appropriate tool for the plan and retrieve the necessary information. It can check the user’s schedule or use a map to determine the best route and estimated travel time.

Based on the information it collects, the orchestrator can then ask the model to prepare the final response.

A standard LLM operating in a single-turn manner might simply provide turn-by-turn directions, whereas this agentic approach is more likely to produce a genuinely useful response.
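The directions example can be sketched as an orchestrator that plans, calls tools, and assembles the answer. This is a minimal sketch under loud assumptions: `plan_from_query`, `get_next_appointment`, and `estimate_route` are hypothetical stubs standing in for model and tool calls, and the 2:00 PM appointment and the "lunchtime traffic doubles the drive" rule are invented so the output matches the example response.

```python
from datetime import datetime, timedelta


# --- Hypothetical tools (stubs standing in for a calendar and a map service) ---
def get_next_appointment(user: str) -> datetime:
    """Return the user's next appointment (stubbed: 2:00 PM)."""
    return datetime(2025, 1, 15, 14, 0)


def estimate_route(destination: str, depart_hour: int) -> dict:
    """Return distance and drive time; assume lunchtime traffic doubles it (stubbed)."""
    base_minutes = 20
    heavy_traffic = 12 <= depart_hour < 14
    return {"miles": 5, "base_minutes": base_minutes,
            "minutes": base_minutes * 2 if heavy_traffic else base_minutes}


# --- Hypothetical model call: a real agent would prompt an LLM here ---
def plan_from_query(query: str) -> dict:
    """Stand-in for asking the model to infer intent and produce a plan."""
    return {"intent": "directions", "destination": "145 Dosan-daero",
            "steps": ["check_schedule", "estimate_route"]}


def orchestrate(query: str, user: str) -> str:
    plan = plan_from_query(query)                # 1. understand intent, make a plan
    meeting = get_next_appointment(user)         # 2a. tool call: calendar
    # 2b. tool call: map; assume departure roughly one hour before the meeting
    route = estimate_route(plan["destination"], meeting.hour - 1)
    leave_by = meeting - timedelta(minutes=route["minutes"])
    # 3. A real agent would ask the model to phrase this answer from the collected facts.
    return (f"The office is {route['miles']} miles away. It normally takes about "
            f"{route['base_minutes']} minutes by car, but lunchtime traffic is heavy, "
            f"so I recommend leaving by {leave_by:%H:%M}.")


print(orchestrate("Can you give me directions to 145 Dosan-daero?", "alice"))
```

Each numbered step corresponds to one of the orchestrator's responsibilities described above; in a deployed agent, steps 1 and 3 would each be a separate LLM inference.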

These components are organically interconnected, allowing the agent to intelligently and autonomously solve problems. Under the guidance of the orchestrator, the models perform reasoning, and the tools facilitate information retrieval and actions.

It is important to note that these components all rely on additional inference-time compute. The orchestrator generates intermediate outputs from the LLM. When the system uses a particular tool, it must generate tokens to call the tool and then use the tool’s output as additional intermediate input to the LLM.
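A rough token count shows why these extra steps add up. The sketch below is illustrative only: every token count is made up, the three-call trace is hypothetical, and it assumes each LLM call re-reads the full accumulated context (no prompt caching across calls), which real serving systems may partly avoid.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Call:
    """One LLM invocation: tokens read (prefill) and tokens generated (decode)."""
    input_tokens: int
    output_tokens: int


def total_tokens(calls: List[Call]) -> int:
    return sum(c.input_tokens + c.output_tokens for c in calls)


def agent_trace(prompt: int, plan: int, tool_call: int,
                tool_result: int, answer: int) -> List[Call]:
    """Hypothetical three-call agent trace; the context grows after every step.

    Assumes each call re-reads the full context (no prompt caching).
    """
    calls = []
    context = prompt
    calls.append(Call(context, plan))          # orchestrator asks the model for a plan
    context += plan
    calls.append(Call(context, tool_call))     # model generates tokens that invoke a tool
    context += tool_call + tool_result         # tool output becomes extra model input
    calls.append(Call(context, answer))        # model writes the final response
    return calls


single_turn = [Call(50, 100)]
agent = agent_trace(prompt=50, plan=80, tool_call=30, tool_result=200, answer=100)
print(total_tokens(single_turn), total_tokens(agent))  # 150 750
```

With these illustrative numbers the agent processes five times as many tokens as the single-turn answer, even though its final response is the same length.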

Advanced AI Agent Techniques for Additional Performance Gains

Researchers are rapidly developing new techniques to enhance the performance of agents with more sophisticated reasoning and more effective problem-solving.

Reasoning Techniques

Agents use various reasoning techniques for systematic problem-solving rather than just providing simple responses. In OpenAI’s research, Chain-of-Thought (CoT) prompting significantly enhances an agent’s ability to analyze and solve complex problems step by step. CoT explicitly represents intermediate thought processes while reasoning, similar to how humans often solve problems. For example, when solving a math problem, it follows a logical flow such as: “First, decompose this equation, then substitute this value, and finally perform the calculation to arrive at the answer.”

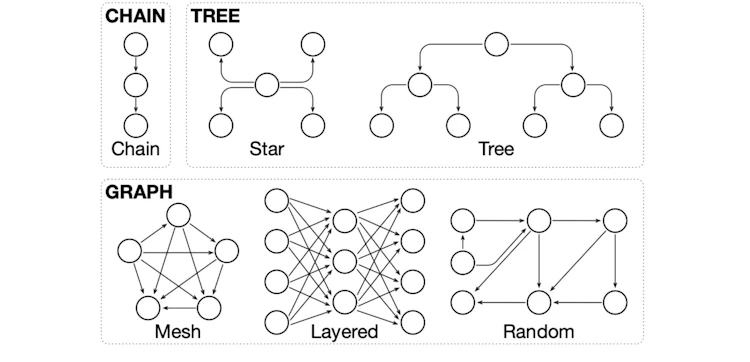
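In practice, CoT prompting is often implemented by showing the model a worked example whose answer spells out its intermediate steps, then asking the new question. A minimal sketch, assuming a hypothetical example problem and the helper names `build_cot_prompt` and `extract_answer`; sending the prompt to a model is left out, and the completion shown is invented for illustration.

```python
import re
from typing import Optional

# A hypothetical worked example whose answer spells out the intermediate steps.
COT_EXAMPLE = (
    "Q: A box holds 3 rows of 4 cookies. 5 are eaten. How many are left?\n"
    "A: First, 3 rows x 4 cookies = 12 cookies. Then, 12 - 5 = 7. The answer is 7.\n\n"
)


def build_cot_prompt(question: str) -> str:
    """Few-shot chain-of-thought prompt: a worked example, then the new question."""
    return COT_EXAMPLE + f"Q: {question}\nA: Let's think step by step."


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final answer out of a step-by-step completion."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt("Solve 2x + 3 = 11 for x.")
# A completion a model might return (hypothetical):
completion = "First, subtract 3: 2x = 8. Then divide by 2: x = 4. The answer is 4."
print(extract_answer(completion))  # 4
```

Every step the model writes out ("subtract 3", "divide by 2") is generated text, which is exactly where the extra inference cost of reasoning comes from.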

Tree-of-Thoughts (ToT) further advances this concept by exploring multiple potential solution paths simultaneously and identifying the optimal one. Similar to how chess players anticipate several moves ahead, ToT generates and evaluates different choices at each step. These choices branch out like a tree, and the agent continues exploring the most promising paths. This method is particularly effective in scenarios requiring complex reasoning or creative problem-solving.
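The branch-evaluate-prune pattern of ToT can be sketched as a beam search over partial solutions. This is a toy, not the original ToT implementation: the "thoughts" are arithmetic operations on a number, and the evaluator is a hand-written distance score where a real system would ask an LLM to propose and rate each thought. `OPERATIONS`, `propose`, `score`, and `tree_of_thoughts` are hypothetical names.

```python
from typing import Callable, List, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, operations applied so far)

# Hypothetical "thought generator": each thought applies one operation.
OPERATIONS: List[Tuple[str, Callable[[int], int]]] = [
    ("+3", lambda x: x + 3),
    ("*2", lambda x: x * 2),
    ("-1", lambda x: x - 1),
]


def propose(state: State) -> List[State]:
    value, path = state
    return [(op(value), path + (name,)) for name, op in OPERATIONS]


def score(state: State, target: int) -> int:
    """Toy evaluator: closer to the target is more promising (an LLM would judge here)."""
    return -abs(state[0] - target)


def tree_of_thoughts(start: int, target: int, depth: int = 4, beam: int = 2) -> State:
    frontier: List[State] = [(start, ())]
    best = frontier[0]
    for _ in range(depth):
        candidates = [child for s in frontier for child in propose(s)]  # branch out
        candidates.sort(key=lambda s: score(s, target), reverse=True)   # evaluate
        frontier = candidates[:beam]                                    # keep best paths
        if score(frontier[0], target) > score(best, target):
            best = frontier[0]
        if best[0] == target:
            break
    return best


value, path = tree_of_thoughts(start=2, target=13)
print(value, path)  # 13 ('+3', '*2', '+3')
```

Every candidate that gets proposed and scored costs inference in a real system, even the branches that are discarded, which is why ToT multiplies token usage.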

The improved performance of reasoning models comes at a cost, however. Each intermediate output (the “thoughts”) is itself generated by the LLM, so a reasoning model might generate N times more tokens to answer a particular question than a standard LLM would.

ReAct (Reasoning + Acting) Framework

The ReAct framework, introduced by Princeton University and Google Research, interleaves an agent’s reasoning and actions. The agent first goes through a “thought” phase, where it analyzes the current situation and plans the next steps. It then moves on to the “action” phase, executing specific actions based on the plan. After that, the agent enters the “observation” phase, where it observes the results of its actions. Finally, through the “reflection” phase, the agent adjusts its next steps based on the observed outcomes. By repeating this cycle, the agent can solve complex problems effectively.

As we saw with reasoning models, these steps also take the form of additional inference compute.
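A minimal ReAct-style loop looks like the sketch below. Real ReAct prompts an LLM to emit "Thought:" and "Action:" lines; here `scripted_model` is a hypothetical stub that returns fixed steps, the `lookup` tool is a toy dictionary with made-up data, and the `name[argument]` action syntax is an assumption for illustration. The reflection phase shows up as the model reading the full transcript, including earlier observations, before each new thought.

```python
from typing import Callable, Dict, List

# Toy knowledge source standing in for a search tool (hypothetical data).
FACTS = {"capital of France": "Paris", "population of Paris": "about 2.1 million"}

TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda q: FACTS.get(q, "no result"),
}


def scripted_model(transcript: List[str]) -> str:
    """Stub for an LLM: picks the next Thought/Action from what has been observed."""
    observations = [line for line in transcript if line.startswith("Observation:")]
    if not observations:
        return "Thought: I need the capital first.\nAction: lookup[capital of France]"
    if len(observations) == 1:
        return "Thought: Now I need its population.\nAction: lookup[population of Paris]"
    return "Thought: I have enough information.\nAction: finish[Paris, about 2.1 million]"


def react(question: str, model: Callable[[List[str]], str], max_steps: int = 5) -> str:
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        lines = model(transcript).splitlines()     # thought + action, given the history
        transcript.extend(lines)
        action = lines[-1][len("Action: "):]       # e.g. "lookup[capital of France]"
        name, arg = action.split("[", 1)
        arg = arg.rstrip("]")
        if name == "finish":
            return arg
        observation = TOOLS[name](arg)             # act, then observe the result
        transcript.append(f"Observation: {observation}")
    return "no answer within step budget"


print(react("What is the capital of France and its population?", scripted_model))
# Paris, about 2.1 million
```

Each pass through the loop is one model call over a growing transcript, so the number of cycles directly sets the inference cost.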

Multi-Agent Systems

Multi-agent systems, in which multiple agents collaborate, can solve complex problems more effectively. Each agent specializes in a specific domain, allowing them to divide and address complex tasks through cooperation. Additionally, multiple agents can verify each other’s results, increasing the reliability of the final output. Furthermore, by performing tasks simultaneously through parallel processing, multi-agent systems can enhance overall processing efficiency.

Tsinghua University’s research proposes that different multi-agent topologies can yield better results in various domains. Their research demonstrates that multi-agent systems can outperform single-agent approaches.

As detailed in the paper More Agents Is All You Need, the multi-inference process undergoes multiple intermediate validation and correction steps, substantially improving the system’s accuracy.

Of course, each LLM in these systems generates additional tokens – and uses inference compute to do so.
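One simple way to spend extra inference on several agents is sampling and voting: ask several independent agents the same question and take the majority answer, which is the basic method behind "More Agents Is All You Need." The sketch below assumes a hypothetical `noisy_agent` that answers correctly 60% of the time and otherwise returns a random wrong-looking number; the accuracies it prints come from this simulation, not from the paper.

```python
import random
from collections import Counter
from typing import Callable, List


def noisy_agent(rng: random.Random, correct: str = "42", p_correct: float = 0.6) -> str:
    """Hypothetical agent: right with probability p_correct, else a scattered guess."""
    if rng.random() < p_correct:
        return correct
    return str(rng.randint(0, 99))


def majority_vote(answers: List[str]) -> str:
    """Return the most common answer (ties go to the answer seen first)."""
    return Counter(answers).most_common(1)[0][0]


def sample_and_vote(agent: Callable[[random.Random], str], n_agents: int,
                    rng: random.Random) -> str:
    answers = [agent(rng) for _ in range(n_agents)]  # n independent inferences
    return majority_vote(answers)


def accuracy(n_agents: int, trials: int = 2000, seed: int = 0) -> float:
    rng = random.Random(seed)
    hits = sum(sample_and_vote(noisy_agent, n_agents, rng) == "42" for _ in range(trials))
    return hits / trials


for n in (1, 5, 15):
    print(n, accuracy(n))
```

Accuracy climbs as agents are added because wrong answers scatter while correct ones agree, but the inference cost grows linearly with `n_agents`.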

Deep Thinking

A recent paper from Microsoft Research demonstrates that “deep thinking,” which leverages multiple rounds of inference, enables small language models to compete with or even surpass larger models such as OpenAI's o1-preview in mathematical reasoning. Using the Phi3-mini-3.8B model, the team improved performance on the MATH benchmark from 41.4% with a single inference to 86.4% with inference-time computation. Unlike general Chain-of-Thought approaches, deep thinking is specialized for mathematical problem-solving. For example, it can invoke one model to solve a particular problem and a separate model to validate each step and ensure the reasoning process is correct.
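The solver-plus-verifier idea can be sketched in a simplified generate-then-verify form. This is not the paper's method, which uses a considerably more elaborate search; it only illustrates a separate checker scoring each step. `solver_samples` is a hypothetical stub returning three made-up solutions to 2x + 3 = 11 (two with arithmetic slips), and `verify_step` stands in for the verifier model by recomputing each step.

```python
from typing import List, Optional, Tuple

Step = Tuple[str, float, float, float]  # (operator, left, right, claimed result)

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}


def solver_samples() -> List[List[Step]]:
    """Stand-in for a solver model: three sampled solutions to 2x + 3 = 11 (hypothetical)."""
    return [
        [("-", 11, 3, 7), ("/", 7, 2, 3.5)],   # slip in step 1
        [("-", 11, 3, 8), ("/", 8, 2, 5)],     # slip in step 2
        [("-", 11, 3, 8), ("/", 8, 2, 4)],     # correct
    ]


def verify_step(step: Step) -> bool:
    """Stand-in for a separate verifier model: checks one step in isolation."""
    op, left, right, claimed = step
    return OPS[op](left, right) == claimed


def step_score(solution: List[Step]) -> float:
    """Fraction of steps the verifier accepts (a simple process-level score)."""
    return sum(verify_step(s) for s in solution) / len(solution)


def solve_with_verification(samples: List[List[Step]]) -> Optional[float]:
    best = max(samples, key=step_score)
    return best[-1][3] if step_score(best) == 1.0 else None


print(solve_with_verification(solver_samples()))  # 4
```

Trusting the first sample alone would have returned 3.5; checking every step of every sample finds the correct answer, at the price of extra solver and verifier inference.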

Chain of Verification

Meta AI researchers propose addressing the hallucination problem in LLMs with a method called Chain-of-Verification. The model drafts an initial response, generates verification questions based on that draft, answers those questions through further inference, and then produces a final response checked against the answers. This method is similar to how a court cross-examines a witness by asking multiple questions from different angles.

While this approach requires two to three times more inference computation than single-model one-shot inference, the study demonstrates it can effectively reduce hallucinations in LLMs.
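The Chain-of-Verification steps can be sketched with a stubbed model. Everything here is hypothetical: `StubLLM` returns a fixed draft containing one error, answers short verification questions reliably (the premise the method relies on), and counts its calls. The final step simply keeps verified items; a real system would make one more model call to rewrite the answer.

```python
from typing import List


def is_prime(n: int) -> bool:
    return n > 1 and all(n % d for d in range(2, int(n ** 0.5) + 1))


class StubLLM:
    """Hypothetical model stub that counts calls.

    Its long-form draft contains an error; its answers to short, focused
    questions are reliable.
    """

    def __init__(self) -> None:
        self.calls = 0

    def draft(self, question: str) -> List[int]:
        self.calls += 1
        return [2, 4, 7]                      # 4 is a hallucinated "prime"

    def plan_questions(self, draft: List[int]) -> List[str]:
        self.calls += 1
        return [f"Is {n} a prime number?" for n in draft]

    def answer(self, verification_question: str) -> bool:
        self.calls += 1                       # answered without seeing the draft
        n = int(verification_question.split()[1])
        return is_prime(n)


def chain_of_verification(llm: StubLLM, question: str) -> List[int]:
    draft = llm.draft(question)                        # 1. baseline response
    questions = llm.plan_questions(draft)              # 2. plan verification questions
    verdicts = [llm.answer(q) for q in questions]      # 3. answer each independently
    # 4. revised response (a real system would ask the model to rewrite it)
    return [n for n, ok in zip(draft, verdicts) if ok]


llm = StubLLM()
print(chain_of_verification(llm, "List three primes below 10."), llm.calls)  # [2, 7] 5
```

The call counter makes the trade-off visible: one draft call becomes five calls here, though the token cost of each short verification call is much smaller than the draft's.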

Each of these techniques can enhance agents’ performance. For example, when an agent is asked to generate a report, CoT/ToT provides a structured problem-solving process, while the ReAct approach manages the iterative execution cycle from data collection to report writing.

Through tool calling, each agent can use specialized tools for data analysis, document drafting, and quality review. Moreover, multi-agent collaboration allows for mutual verification.

Combining these advanced techniques lets agents go beyond simple task execution to intelligent, adaptive problem-solving. Because the agent reasons through its process explicitly, it is more reliable and makes human intervention easier when necessary.

A New Era Brings a New Challenge for AI Hardware

While agents can outperform traditional LLMs and reduce hallucinations, they inevitably require more inference. This is because, in the problem-solving process, agents continuously analyze situations, formulate plans, and evaluate results through iterative reasoning.

Because agents need more inference compute than the LLM-only systems that preceded them, demand for inference hardware is set to grow. A recent Market.us analysis projects that the AI inference market will grow at a CAGR of 18.45% from 2023 to 2030, with hardware expected to account for 60% of this market.
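To put the projected growth rate in perspective, a compound annual growth rate r over n years multiplies the starting size by (1 + r)^n. A short calculation, assuming seven compounding periods between 2023 and 2030; the projection itself is Market.us's, and this only does the arithmetic.

```python
def growth_factor(cagr: float, years: int) -> float:
    """Total growth multiple implied by a compound annual growth rate."""
    return (1 + cagr) ** years


factor = growth_factor(0.1845, 2030 - 2023)
print(f"{factor:.2f}x")  # 3.27x
```

In other words, an 18.45% CAGR over that period implies a market roughly 3.3 times its 2023 size by 2030.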

Today, the AI hardware market is dominated by GPUs, which are general-purpose processors capable of handling not just AI training and inference, but also graphics processing and other workloads. However, this versatility inevitably leads to efficiency trade-offs.

To overcome these limitations, Furiosa has developed the Tensor Contraction Processor (TCP), a neural processing unit (NPU) architecture optimized specifically for inference.

Using the TCP architecture, we developed RNGD, a power-efficient inference accelerator for data centers. We have been sampling RNGD with customers and ramping up production since we debuted the chip in August 2024.

Building Hardware for the Agentic AI World

It is difficult to predict exactly what demands future Agentic AI systems will place on hardware. The history of LLM development has seen shifts to larger models (from the 1.5B-parameter GPT-2 to the 175B-parameter GPT-3, for example) but also the emergence of smaller, more efficient model architectures (with the original 13B version of Llama outperforming GPT-3, for example).

As we will see in upcoming blog posts, RNGD was built with the versatility to power a wide range of model sizes and architectures. While the details of future Agentic AI systems have yet to be determined, we can be confident that they will rely heavily on inference compute and that RNGD will be an effective, efficient way to provide that compute.

Written by:

Donggun Kim, Head of Product

Heeju Kim, Algorithm Team Engineer

Minjae Lee, Algorithm Team Engineer

Jinwook Bok, Platform Team Engineer

Jihoon Yoon, Product Manager