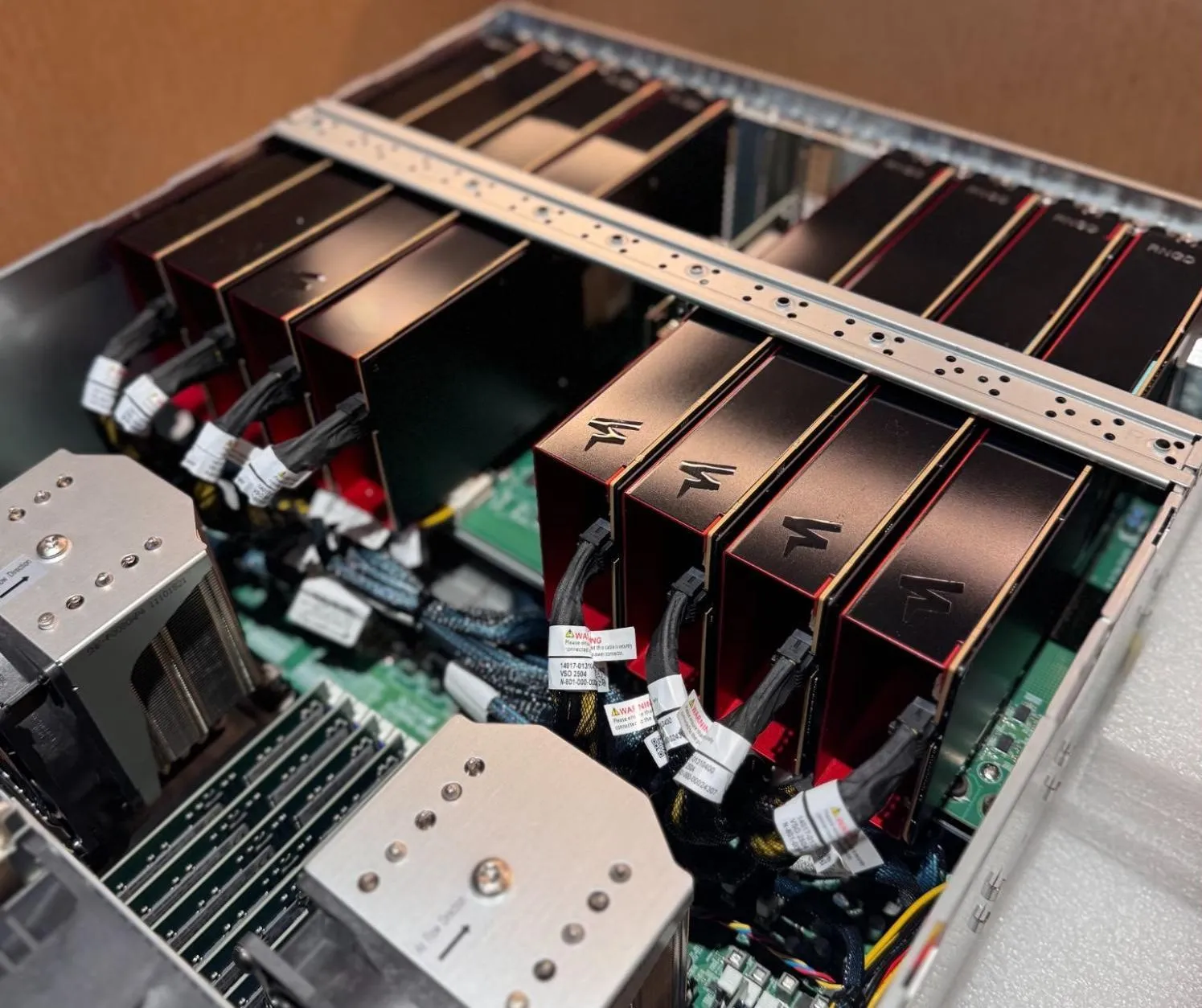

To meet growing demand from global enterprise customers for our flagship AI chip, RNGD (pronounced “Renegade”), and to accelerate our roadmap, FuriosaAI has closed a $125 million Series C funding round. This brings our total funding to $246 million.

This new capital investment – with participation from Korea Development Bank, Industrial Bank of Korea, Keistone Partners, PI Partners, and Kakao Investment – positions us to make significant strides toward achieving our mission.

As one of our partners, Kakao Investment CEO Do-Young Kim, noted: "FuriosaAI has built a compelling alternative to GPUs for AI inference. Their Tensor Contraction Processor chip architecture delivers the performance and power efficiency that is critical for the next wave of AI applications. They have the right technology and the right team to transform AI hardware, and we are excited to help fuel their growth."

A new approach, validated by a leading global enterprise customer

Furiosa’s mission is to make AI computing sustainable. While the demand for AI compute is exploding, GPUs are too power-hungry to scale effectively and economically. This broken model limits AI's true potential.

Our technology offers a better way forward. Earlier this month, LG AI Research announced it has adopted RNGD to run its EXAONE foundation models. In LG’s testing, RNGD delivered 2.25x better performance-per-watt for LLM inference compared to legacy GPUs. This validates RNGD’s enterprise readiness and represents a milestone: one of the first major on-premises enterprise adoptions of inference hardware from a semiconductor startup.

RNGD’s breakthrough performance comes from its innovative Tensor Contraction Processor (TCP) architecture. The fundamental challenge in AI hardware isn't just raw compute power, but using that compute efficiently to deliver excellent real-world performance across many different models and deployment scenarios.

We co-designed our TCP hardware and software stack specifically to work with tensor contraction—the native language of deep learning computations. This gives the compiler a higher-level view to create an efficient custom dataflow path for each computation, which minimizes wasteful data movement.

The result: RNGD delivers a dramatically lower total cost of ownership, more flexible deployments, and faster scaling.

“The world urgently needs new solutions to scale AI compute, and we believe Furiosa is one of the very few companies with the proven architecture innovation and software maturity to deliver,” said PI Partners CEO Yoon D. Kang. “With the success of Furiosa’s RNGD chip and significant design win with LG AI Research, June and his leadership team have demonstrated their ability to deliver on their audacious vision for transforming AI computing. We are thrilled to deepen our partnership as they enter their next phase of growth.”

Accelerating our work to reshape AI compute

The new funding we are announcing today will be put to immediate use in two key areas:

- Scaling RNGD production and accelerating our go-to-market: We will accelerate manufacturing to support our growing list of global customers. We look forward to announcing additional global customers and more design wins soon.

- Developing our next-generation chip: We are making a bold bet to ensure our next chip is the best solution for tomorrow’s agentic AI systems and reasoning models. When we designed RNGD, we looked beyond the BERT-sized models of the time, and similarly, we are now designing our next chip to push the boundaries of high-performance, ultra-efficient AI compute even further.

“LG AI Research's adoption of RNGD has validated our chip’s performance and superior energy efficiency,” said Furiosa CEO June Paik. “Now we are scaling RNGD production globally to meet growing customer demand and applying learnings in the development of our next-generation chip.”

To execute on our ambitious goals, we’re thrilled to welcome two world-class engineering leaders to our executive team:

- Jeehoon Kang, Chief Research Officer: A renowned expert in parallel systems from KAIST, Jeehoon joins us with multiple Distinguished Paper Awards from top conferences like PLDI and POPL. He will lead the design of our next-generation compiler and software architecture.

- Youngjin Cho, Vice President of Hardware: A leading silicon and SoC expert, Youngjin was previously a Corporate Vice President at Samsung Electronics and a Visiting Scholar at Stanford University. He will accelerate development of our next-generation chip.

With a clear roadmap, visionary investors, a growing technical leadership team, and significant customer momentum, we are more confident than ever that our team’s hard work will deliver a new, sustainable foundation for AI.

Written by

The Furiosa Team

.avif)