Furiosa presents papers about improving AI performance at ICML 2025 and ACL 2025

News

As powerful as today’s language models are, there are many promising research areas with the potential to make these systems more efficient, more able to master complex reasoning in new domains, and better at adapting to specialized tasks without retraining from scratch.

Last month, we presented four papers at ICML 2025 in Vancouver and two papers at ACL 2025 in Vienna. These six papers were authored by our engineers and researchers, with collaborators from Korea University, Seoul National University, University of Wisconsin-Madison, Ajou University, UC Berkeley, UC San Francisco, ICSI, LBNL, Microsoft’s Gray Systems Lab, and University of Lisbon.

This work focuses on three main areas, each of which could eventually help businesses derive more benefits from models running on our high-performance inference accelerator, RNGD (pronounced “renegade”), as well as future FuriosaAI products:

More efficient: Novel methods to accelerate large model inference, slash computational costs, and automate the complex process of parallelizing models across devices

More capable: Research that pushes models to think more flexibly, enabling them to apply sophisticated reasoning not just to familiar problems like mathematics, but to diverse domains like law

More flexible: State-of-the-art techniques to efficiently fine-tune new architectures like State Space Models (SSMs), making it far easier for developers to customize them

Below is a quick summary of each paper.

Parameter-Efficient Fine-Tuning of State Space Models

Deep State Space Models (SSMs), such as Mamba, have become powerful tools for language modeling, offering high performance and linear scalability with sequence length. However, the application of parameter-efficient fine-tuning (PEFT) methods to SSM-based models remains largely underexplored. We start by investigating two fundamental questions on existing PEFT methods: (i) How do they perform on SSM-based models? (ii) Which parameters should they target for optimal results?

Our analysis shows that Low-Rank Adaptation (LoRA) and its variants consistently outperform all other PEFT methods. While LoRA is effective for linear projection matrices, it fails on SSM modules and yet still outperforms other methods applicable to SSMs, indicating their limitations. This underscores the need for a specialized SSM tuning approach. To address this, we propose Sparse Dimension Tuning (SDT), a PEFT method tailored for SSM modules. Combining SDT for SSMs with LoRA for linear projection matrices, we achieve state-of-the-art performance across extensive experiments.

VersaPRM: Multi-Domain Process Reward Model via Synthetic Reasoning Data

Process Reward Models (PRMs) have proven effective at enhancing mathematical reasoning for Large Language Models (LLMs) by leveraging increased inference-time computation. However, they are predominantly trained on mathematical data and their generalizability to non-mathematical domains has not been rigorously studied. In response, this work first shows that current PRMs have poor performance in other domains.

To address this limitation, we introduce VersaPRM, a multi-domain PRM trained on synthetic reasoning data generated using our novel data generation and annotation method. VersaPRM achieves consistent performance gains across diverse domains. For instance, in the MMLU-Pro category of Law, VersaPRM via weighted majority voting achieves a 7.9% performance gain over the majority voting baseline, surpassing Qwen2.5-Math-PRM's gain of 1.3%.

TabFlex: Scaling Tabular Learning to Millions with Linear Attention

Leveraging the in-context learning (ICL) capability of Large Language Models (LLMs) for tabular classification has gained significant attention for its training-free adaptability across diverse datasets. Recent advancements, like TabPFN, excel in small-scale tabular datasets but struggle to scale for large and complex datasets.

Our work enhances the efficiency and scalability of TabPFN for larger datasets by incorporating linear attention mechanisms as a scalable alternative to complexity-quadratic self-attention. Our model, TabFlex, efficiently handles tabular datasets with thousands of features and hundreds of classes, scaling seamlessly to millions of samples.

Pfeife: Automatic Pipeline Parallelism for PyTorch

Pfeife is a new algorithm that makes models run faster by parallelizing their execution automatically. Larger models don't fit on a single device, so it's necessary to somehow split them across devices. Even if they fit, it can be useful to split them to improve performance.

There are several ways to parallelize a model, including data parallelism (DP), tensor parallelism (TP), and pipeline parallelism (PP). Often, all techniques are combined to form so-called 3D parallelism.

Pfeife automates PP. Although there are other tools that implement PP, they are not automatic: model developers have to identify the split points manually. Conversely, Pfeife requires zero manual work. It identifies the best split points for a particular hardware setup, taking into account the performance characteristics of each device and the interconnect between them.

Moreover, we show that uneven distribution of work typically improves performance, in contrast with the popular belief that work must be balanced across devices. The key observation is that one needs to balance both computation and communication at the same time to obtain the optimal setup. Pfeife finds these unbalanced schedules automatically, outperforming Microsoft's DeepSpeed by up to 22%.

State-offset Tuning: State-based Parameter-Efficient Fine-Tuning for State Space Models

In this paper, we examine the limitations of prompt-based parameter-efficient fine-tuning (PEFT), including prompt tuning and prefix-tuning, for deep state space models (SSMs). We find these approaches are particularly ineffective as sequence length grows, because SSMs, like recurrent neural networks (RNNs), tend to forget early tokens.

To address this, we introduce State-Offset Tuning, which learns a bias vector added to the SSM’s output at every time step to mitigate forgetting. On Mamba and Mamba-2, state-offset tuning consistently outperforms prompt-based methods and other popular PEFT techniques such as LoRA under comparable parameter budgets, achieving higher accuracy and setting a new state of the art for SSMs.

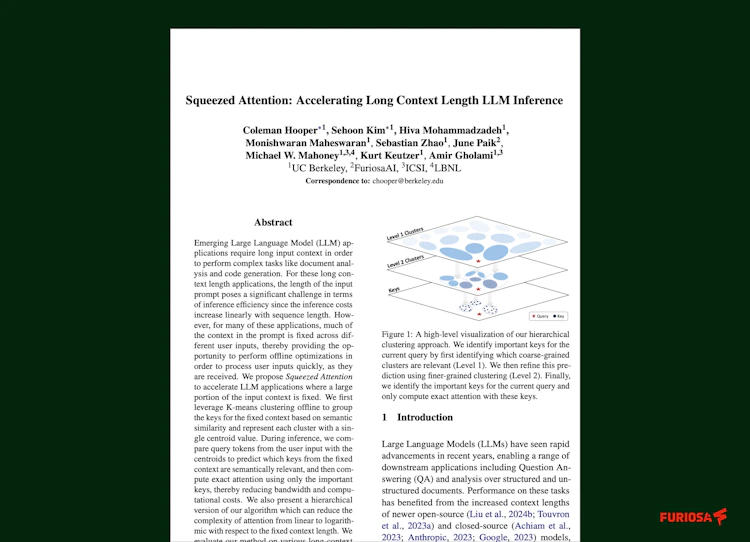

Squeezed Attention: Accelerating Long Context Length LLM Inference

Transformer-based LLMs become inefficient as context length grows: memory scales linearly and compute scales quadratically with sequence length. Yet many workloads reuse a large, fixed portion of the prompt across queries (e.g., multi-question QA over a shared document or code generation over a common codebase).

We introduce Squeezed Attention, which compresses fixed context by clustering its attention keys offline. At inference time, queries are compared against cluster centroids to select relevant clusters. The system then loads only the corresponding keys and computes attention over them. This reduces memory footprint and I/O bandwidth. We further propose a hierarchical variant that reduces attention complexity from linear to logarithmic in the fixed-context length.

On LongBench, Squeezed Attention reduces the KV cache budget by 3.1 times with no noticeable accuracy loss. We also provide optimized kernels for centroid comparison and sparse FlashAttention over the selected keys, achieving up to a 4-fold speed-up.

Dive in for more details by reading the papers

Contributing to prestigious conferences like ICML and ACL shows we remain grounded in first principles and aligned with the needs of cutting-edge and rapidly changing AI workloads. Please take some time to read our papers to get a better understanding.

Here are the four papers we presented at ICML 2025:

VersaPRM: Multi-Domain Process Reward Model via Synthetic Reasoning Data

TabFlex: Scaling Tabular Learning to Millions with Linear Attention

Here are the two papers we presented at ACL 2025:

State-offset Tuning: State-based Parameter-Efficient Fine-Tuning for State Space Models

Squeezed Attention: Accelerating Long Context Length LLM Inference

Thank you to our team and all our collaborators. It was an honor presenting at such prestigious conferences, and we’re looking forward to returning to both next year.